PydanticAI: Type-Safe LLM Outputs with Auto-Validation

Plus visualize ML model performance

Grab your coffee. Here are this week’s highlights.

📅 Today’s Picks

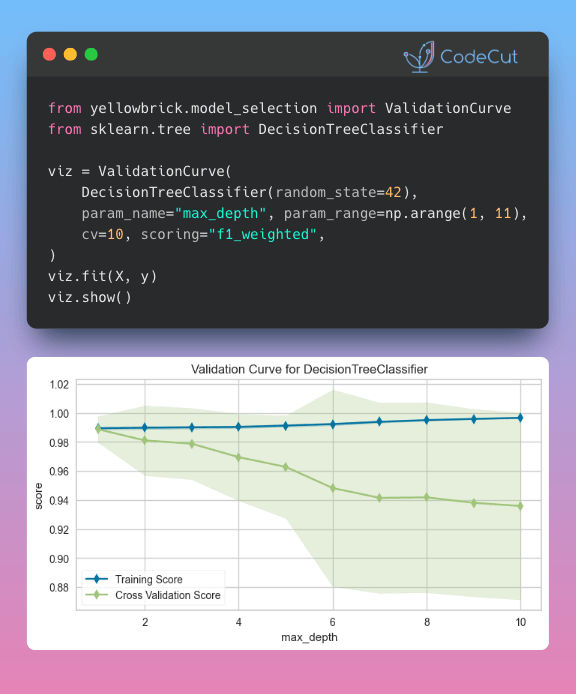

Yellowbrick: Detect Overfitting vs Underfitting Visually

Problem

Hyperparameter tuning requires finding the sweet spot between underfitting (model too simple) and overfitting (model memorizes training data).

You could write the loop, run cross-validation for each value, collect scores, and format the plot yourself. But that’s boilerplate you’ll repeat across projects.
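To make that boilerplate concrete, here is a minimal sketch of the manual approach. It assumes a `DecisionTreeClassifier` tuned on scikit-learn's built-in breast cancer dataset; the dataset, the 1 to 10 depth range, and 5-fold CV are illustrative choices, not the setup behind the plot above.

```python
import matplotlib.pyplot as plt
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import cross_validate
from sklearn.tree import DecisionTreeClassifier

# Assumed example data: scikit-learn's built-in breast cancer dataset
X, y = load_breast_cancer(return_X_y=True)
depths = list(range(1, 11))
train_means, val_means = [], []

# The loop: cross-validate one model per candidate max_depth and collect mean scores
for depth in depths:
    model = DecisionTreeClassifier(max_depth=depth, random_state=42)
    scores = cross_validate(
        model, X, y, cv=5, scoring="accuracy", return_train_score=True
    )
    train_means.append(scores["train_score"].mean())
    val_means.append(scores["test_score"].mean())

# The plot formatting you would otherwise redo in every project
fig, ax = plt.subplots()
ax.plot(depths, train_means, marker="o", label="Training score")
ax.plot(depths, val_means, marker="o", label="Validation score")
ax.set_xlabel("max_depth")
ax.set_ylabel("Accuracy")
ax.set_title("Validation curve (manual)")
ax.legend()
plt.show()
```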

Solution

Yellowbrick is a machine learning visualization library built for exactly this.

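Its ValidationCurve visualizer runs cross-validation for each value of a hyperparameter and plots training and validation scores side by side. You can see where the model underfits, where it overfits, and which value to pick, without writing the loop or formatting the plot yourself.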

How to read the plot in this example:

Training score (blue) stays high as max_depth increases

Validation score (green) drops after depth 4

The growing gap means the model memorizes training data but fails on new data

Action: Pick a max_depth of around 3-4, where the validation score peaks before the gap widens.

📖 View the full article | 🧪 Run code | ⭐ View GitHub

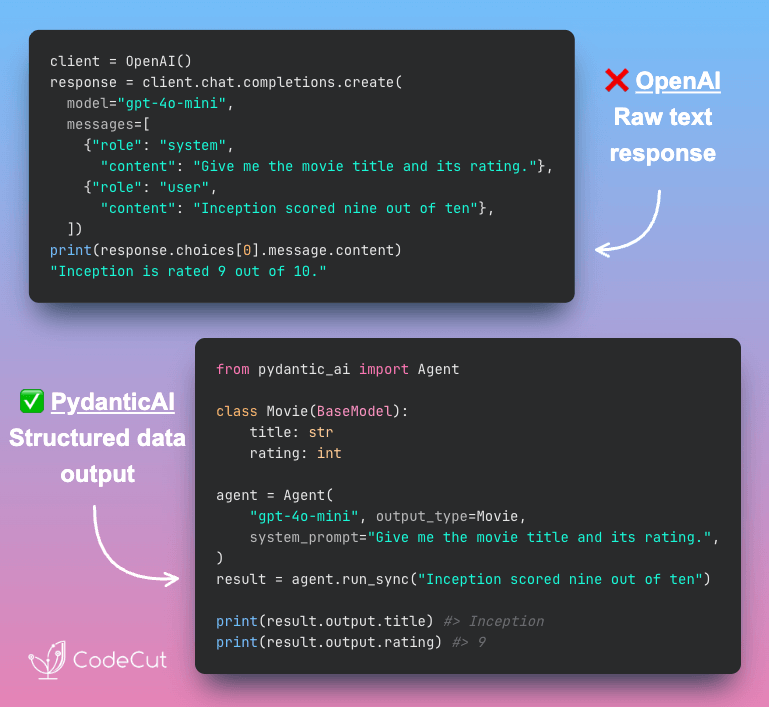

PydanticAI: Type-Safe LLM Outputs with Auto-Validation

Problem

Without structured outputs, you’re working with raw text that might not match your expected format.

Unexpected responses, missing fields, or wrong data types can cause errors that are easy to miss during development.
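To see the failure mode concretely, here is a sketch using plain Pydantic v2 on two hypothetical raw LLM replies. The `ProductInfo` schema and the reply strings are made up for illustration. Without a validation step, the second reply would flow into your code with a text price and no `in_stock` field.

```python
from pydantic import BaseModel, ValidationError


class ProductInfo(BaseModel):
    """Hypothetical schema we expect the LLM reply to follow."""
    name: str
    price: float
    in_stock: bool


# Hypothetical raw replies an LLM might return for the same prompt
good_reply = '{"name": "Acme Widget", "price": 19.99, "in_stock": true}'
bad_reply = '{"name": "Acme Widget", "price": "about twenty dollars"}'


def parse_reply(raw: str):
    """Return a validated ProductInfo, or None after printing each validation error."""
    try:
        return ProductInfo.model_validate_json(raw)
    except ValidationError as exc:
        # Reports both problems: the non-numeric price and the missing in_stock field
        for err in exc.errors():
            print(err["loc"], err["msg"])
        return None


product = parse_reply(good_reply)
failed = parse_reply(bad_reply)
```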

Solution

PydanticAI uses Pydantic models to automatically validate and structure LLM responses.

Key benefits:

Type safety at runtime with validated Python objects

Automatic retry on validation failures

Direct field access without manual parsing

Integration with existing Pydantic workflows

LangChain also supports structured outputs, but PydanticAI is a lighter alternative when structured outputs are all you need.

📖 View the full article | 🧪 Run code | ⭐ View GitHub

☕️ Weekly Finds

pdfplumber [Data Processing] - Plumb a PDF for detailed information about each char, rectangle, line, et cetera - and easily extract text and tables.

cognee [LLM] - Memory for AI Agents in 6 lines of code - transforms data into knowledge graphs for persistent, scalable AI memory.

featuretools [ML] - An open source Python library for automated feature engineering from relational and temporal datasets.

📚 Top 5 Articles of 2025

A Deep Dive into DuckDB for Data Scientists - Query billions of rows on your laptop with DuckDB. Learn SQL analytics, Parquet integration, and when to choose DuckDB over pandas.

Top 6 Python Libraries for Visualization: Which One to Use? - Compare Matplotlib, Seaborn, Plotly, Altair, Bokeh, and PyGWalker. Find the right visualization library for your data science workflow.

Transform Any PDF into Searchable AI Data with Docling - Extract text, tables, and structure from PDFs for RAG pipelines. Docling handles complex layouts that break traditional parsers.

Narwhals: Unified DataFrame Functions for pandas, Polars, and PySpark - Write DataFrame code once, run it on pandas, Polars, or PySpark. Narwhals provides a unified API without vendor lock-in.

Goodbye Pip and Poetry. Why UV Might Be All You Need - Replace pip, virtualenv, pyenv, and Poetry with one tool. UV handles Python versions, dependencies, and reproducible builds in a single workflow.

Before You Go

🔍 Explore More on CodeCut

Tool Selector - Discover 70+ Python tools for AI and data science

Production Ready Data Science - A practical book for taking projects from prototype to production

⭐ Rate Your Experience

How would you rate your newsletter experience? Share your feedback →